FogAg: A Novel Fog-Assisted Smart Agriculture Framework for Multi-Layer Sensing and Real-Time Analytics of Water-Nitrogen Colimitations in Field Crops

Duration: August 3, 2024 – August 2, 2027

Total Award Amount: $827,534

Investigator(s):

Florida Atlantic University: Arslan Munir (Lead PI)

Kansas State University: Mitchell Neilsen (Co-PI), Naiqian Zhang (Co-PI), Paul R. Armstrong (Co-PI), and Rachel L. Veenstra Cott (Co-PI)

Purdue University: Ignacio Ciampitti (Co-PI)

Sponsor: U.S. Department of Agriculture (USDA) National Institute of Food and Agriculture (NIFA)

Award Abstract

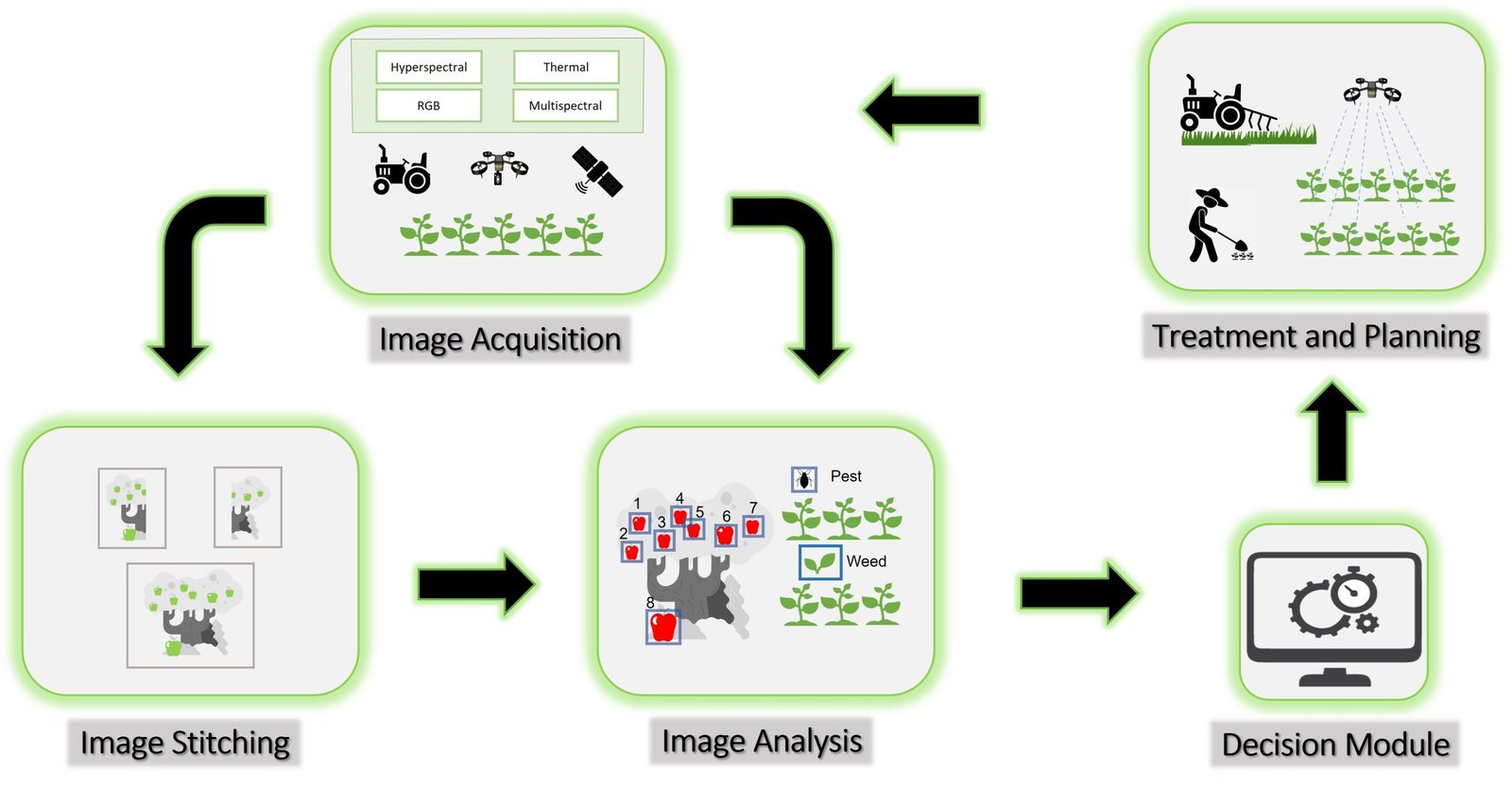

This research project aims to fill gaps in contemporary smart agriculture (SmartAg) technologies by proposing FogAg, a fog-assisted framework that integrates multi-layer sensing and real-time analytics of the plant-soil system. FogAg is intended to help solve the complex biological puzzle of how two key crop inputs, water and nitrogen, individually and interactively affect crop yield. FogAg will also provide near-real-time diagnosis of crop stresses and translate the data into usable agronomic decisions that not only boost crop productivity but also increase yield per unit of resource used in farming systems.

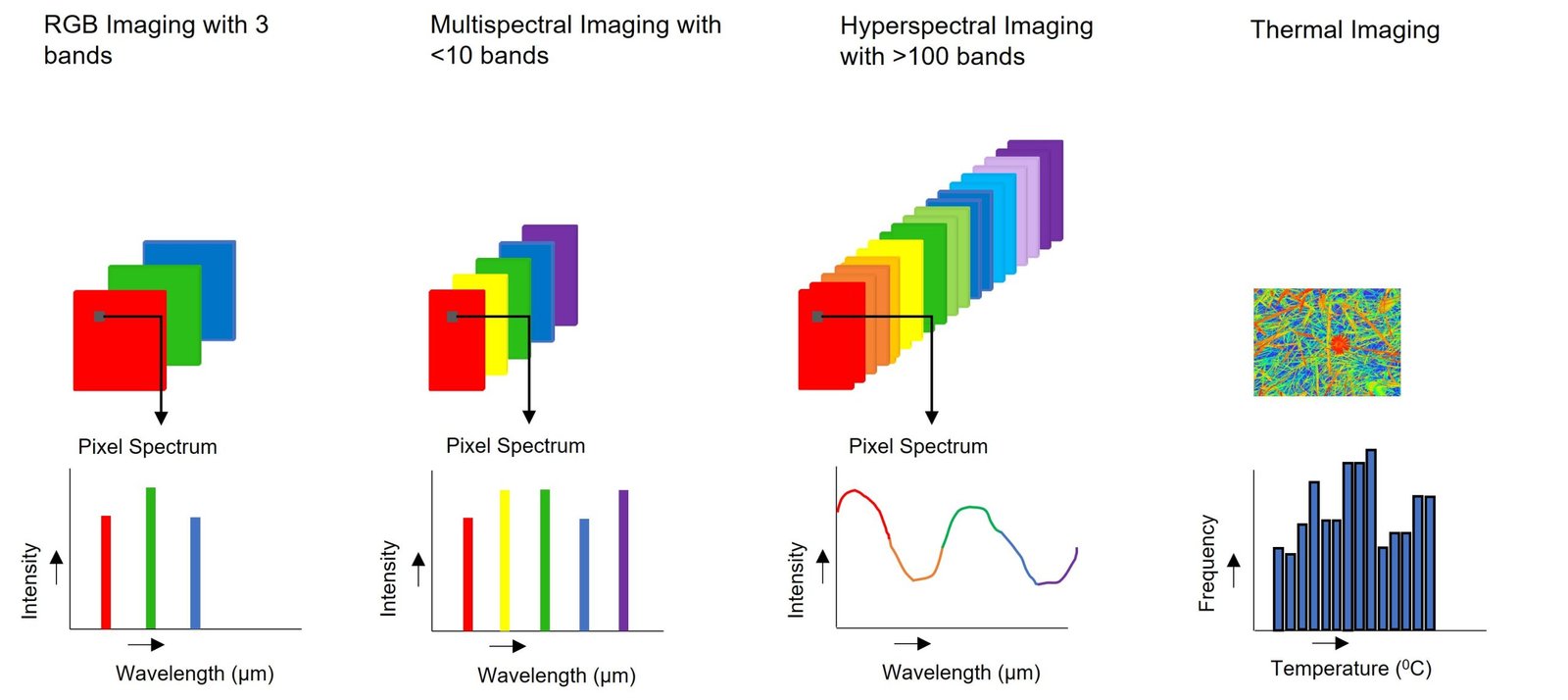

To meet the project goals and develop the proposed FogAg framework, the project will make scientific innovations in core cyber-physical systems (CPS) areas on four fronts: architecture, sensing, data analytics and machine learning, and modeling. At the system level, the proposed FogAg architecture enables near-real-time handling of latency-sensitive events in agriculture. At the device level, the project proposes Neuro-Sense, which will support real-time signal and image processing by reconfiguring data acquisition, computation, and communication parameters to maintain energy-efficient performance under dynamically changing workloads.

On the sensing front, the project proposes a sensing and imaging system for in-soil sensing as well as sensing above, below, and within the plant canopy. The proposed light-emitting diode (LED)-based multispectral imaging system will be economical and will also provide considerable flexibility, because different wavelengths can be used or substituted depending on measurement requirements, unlike the filter-based methods common in multispectral systems or very expensive hyperspectral cameras. The proposed near-infrared (NIR) point measurement sensor will be an order of magnitude cheaper than a conventional diode array spectrometer while offering several interfacing options and customizable optics. For in-soil sensing, the project proposes a novel frequency response (FR)-based dielectric sensor and a mobile wireless sensor network that can simultaneously provide more accurate, real-time readings of soil nutrients and water content than other commercially available soil sensors.

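The abstract does not say how Neuro-Sense chooses its acquisition, computation, and communication parameters. As a rough illustration only, the sketch below picks, from a hypothetical table of device operating points, the lowest-energy configuration whose processing latency still meets the current workload's deadline. The `OperatingPoint` type, its fields, the numeric values, and `select_operating_point` are all assumptions made for this example, not the project's design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    """Hypothetical device configuration. In a real device this would bundle
    settings such as sensor sampling rate, compute clock, and radio mode;
    here only the resulting throughput and power draw are modeled."""
    name: str
    ops_per_second: float  # effective processing throughput
    power_mw: float        # average power draw in this configuration (mW)


def select_operating_point(points, work_ops, deadline_s):
    """Return the point that completes `work_ops` operations within
    `deadline_s` seconds using the least energy, or None if no point
    meets the deadline."""
    feasible = []
    for p in points:
        latency_s = work_ops / p.ops_per_second
        if latency_s <= deadline_s:
            energy_mj = p.power_mw * latency_s  # mW * s = mJ
            feasible.append((energy_mj, p))
    if not feasible:
        return None
    # Compare on energy only, so the dataclasses themselves are never compared.
    return min(feasible, key=lambda item: item[0])[1]


# Hypothetical operating points (illustrative numbers only).
POINTS = [
    OperatingPoint("low-power", ops_per_second=1e6, power_mw=50.0),
    OperatingPoint("balanced", ops_per_second=4e6, power_mw=120.0),
    OperatingPoint("high-performance", ops_per_second=1e7, power_mw=400.0),
]

if __name__ == "__main__":
    best = select_operating_point(POINTS, work_ops=2e6, deadline_s=1.0)
    print(best.name)  # balanced
```

With these numbers the low-power point misses the 1 s deadline, and the balanced point finishes in 0.5 s for 60 mJ versus 80 mJ for the high-performance point. The example also shows why such a policy must be re-evaluated as the workload changes: tightening the deadline to 0.3 s makes the high-performance point the only feasible choice.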
On the data analytics and machine learning front, Neuro-Sense integrates a convolutional neural network (CNN) accelerator that is expected to provide higher throughput, hardware utilization, and area and energy efficiency than existing CNN accelerators. It does so by exploiting two-sided sparsity (zeros in both activations and weights), binary sparse masks, and the ability to look ahead into future computations so that only valid multiply-and-accumulate (MAC) operations are performed. The proposed three-tier FogAg data analytics framework will process sensing data at three levels: IoT, fog, and cloud. On the modeling front, the project will develop tree-based predictive agronomic models that use multi-layer sensed data, along with historical yield and weather data, to generate variable-rate fertilizer and irrigation prescription maps in near real time, boosting productivity and yield per unit of resource used in the farming system.
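The accelerator's internals are not described in this abstract. The sketch below illustrates only the general idea behind two-sided sparsity with binary sparse masks: each operand is stored as its non-zero values plus a bitmask, and ANDing the activation and weight masks identifies in advance which positions produce a non-zero product, so only those MACs are issued. The functions `compress` and `sparse_dot` and this compressed layout are illustrative assumptions, not the project's hardware design.

```python
def compress(vector):
    """Encode a dense vector as (bitmask, packed non-zero values).
    Bit i of the mask is set when vector[i] != 0."""
    mask = 0
    values = []
    for i, x in enumerate(vector):
        if x != 0:
            mask |= 1 << i
            values.append(x)
    return mask, values


def sparse_dot(act, wgt):
    """Dot product of two compressed vectors that performs a MAC only where
    both operands are non-zero (two-sided sparsity).
    Returns (result, number_of_macs_issued)."""
    act_mask, act_vals = act
    wgt_mask, wgt_vals = wgt
    # "Look ahead": AND the masks to find every position with a valid MAC.
    valid = act_mask & wgt_mask
    acc, macs = 0, 0
    while valid:
        bit = valid & -valid  # isolate the lowest remaining set bit
        below = bit - 1       # mask of all lower positions
        # Offset into each packed array = number of non-zeros before this position.
        a = act_vals[bin(act_mask & below).count("1")]
        w = wgt_vals[bin(wgt_mask & below).count("1")]
        acc += a * w
        macs += 1
        valid ^= bit          # clear the processed position
    return acc, macs


if __name__ == "__main__":
    acts = [0, 3, 0, 2, 5, 0, 0, 1]
    wgts = [4, 0, 0, 7, 2, 0, 6, 0]
    result, macs = sparse_dot(compress(acts), compress(wgts))
    print(result, macs)  # 24 2  (a dense loop would issue 8 MACs)
```

Here the masks overlap at only two of eight positions, so two MACs produce the same result a dense loop would compute with eight. Hardware designs typically apply this mask intersection and prefix-count logic in parallel across many lanes rather than iterating bit by bit as this Python sketch does.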

The proposed FogAg framework, with its spatial and temporal scalability, will find many applications in rural and urban development. The proposed technologies will promote efficient use of resources and improve crop health, quality, and yield, creating significant social and economic benefits for food security. Jointly considering water-nitrogen colimitations will have positive environmental impacts by reducing the nitrogen footprint and environmental pollution. The investigators will incorporate significant research results obtained during this project into undergraduate and graduate education.

Publications

- Sumaira Ghazal, Arslan Munir, and Waqar S. Qureshi, “Computer vision in smart agriculture and precision farming: Techniques and applications”, Elsevier KeAi Artificial Intelligence in Agriculture, vol. 13, pp. 64-83, September 2024.

- Kai Zhao and Mitchell Neilsen, “Contour detection of seeds based on traditional and convolutional neural network (CNN) based algorithms”, to appear in Proceedings of the 28th International Conference on Image Processing, Computer Vision, and Pattern Recognition (IPCV), July 22-24, 2024.

- Sumaira Ghazal, Namratha Kommineni, and Arslan Munir, “Comparative Analysis of Machine Learning Techniques Using RGB Imaging for Nitrogen Stress Detection in Maize”, under review in AI, 2024.

Datasets

Maize Nitrogen Stress Dataset

Description: The maize nitrogen stress dataset captures data for monitoring nitrogen stress in maize at various developmental stages. The data were collected at the Kansas State University North Farm Agronomy Education Center (AEC). The plot covers approximately 1,875 square feet and is divided into 12 rows, which are split into two halves: Nitrogen Surplus Rows and Nitrogen Deficient Rows. Each half is clearly marked to keep an accurate record of which area received which nitrogen level.

The dataset includes two types of images: RGB images captured with a Canon SX530 HS 16 MP camera, and multispectral images captured with a MicaSense RedEdge-MX camera covering wavelengths from 400 nm to 900 nm.

Code

Coming Soon